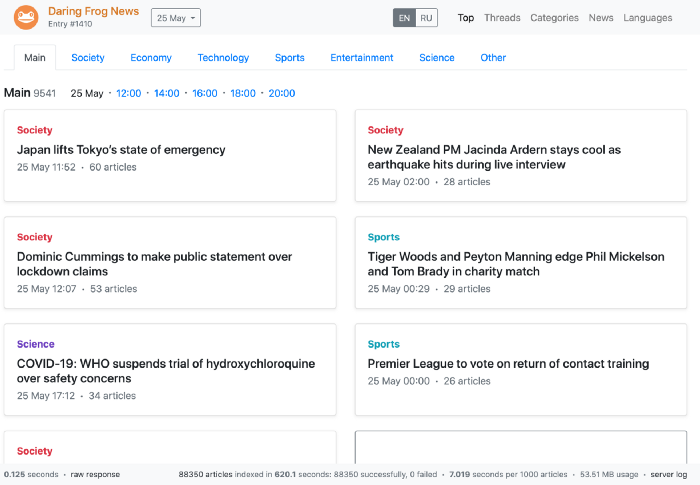

Recently Telegram ran a Data Clustering competition where participants were asked to build a prototype of a news aggregation service, similar to services like Google News and Yandex.News. I finished second in this contest (nickname: Daring Frog) using nothing but Python, so decided to share details of my solution in case if someone finds it helpful. You can check out scores per task (thanks to Mindful Kitten), speed comparison, final leaderboard, code, and play with the live app which looks like this:

Live app, available at: https://entry1410-dcround2.usercontent.dev/20200525/en/

🎯 The Task

Full description is available on the contest page (I recommend glancing over it before reading this post). In a nutshell the task was to produce a binary executable which can perform the following:

🗃 Part 1 — Offline Batch Processing

- Traverse an input directory and parse all HTML files (articles) in it with news content

- Detect article language and filter out articles which are not in English or Russian

- Classify articles into one or several of 7 categories:

society,economy,technology,sports,entertainment,science,other - Filter out articles which are not news (e.g. how-tos, tips, encyclopedic content)

- Group articles into threads. Thread is just a collection of articles about the same event

- Sort articles within a thread by relevance

- Sort threads by importance

📡 Part 2 — Online Processing with HTTP server

- Spin up the HTTP server, load its previous state if one exists

- Index incoming articles

- Update existing articles

- Delete existing articles

- Return top article threads, with optional filters by language, category, and time range

Apart from these requirements, Telegram highlighted that “speed is of utmost importance”, asked for binary sizes of 200Mb or less, and required the binary to execute on a standalone 8-core 16Gb RAM Debian GNU/Linux 10.1 machine without a GPU. On top of that it was not possible to test or launch the app on Telegram side prior to submission, so contestants basically had to ensure flawless reproducibility. These requirements were the main factors behind my preference for the simplicity of the algorithms and libraries I used.

✅ Solution

Disclaimer: as the contest lasted only 2 weeks, there are numerous oversights and areas for improvement in this solution. I list some of them in Appendix A.

🗃 Part 1 —Offline Batch Processing

1. Preprocessing. Input HTML is read as plaintext. Metatags for the title, description, URL, and publication time are extracted using simple regexes. Basic cleanup is done for the title and description: stripping punctuation and special symbols, lowercasing (but not for acronyms), adding spaces around currency values (e.g. “100$” becomes “100 $”), capping max string length. Words are normalized using lemminflect (for EN) and OpenCorpora (for RU) to improve the quality of categorization and clustering (described below), as it helps to lower the vocabulary size and reduces overfitting. Original texts are kept and used together with cleaned up ones, depending on the task.

2. Language Detection. Language detection is performed using fastText (lid.176.bin) library. Language is set to UNK if fastText confidence score is below a given threshold. Additional heuristics (matching on certain symbols and popular phrases) are used for disambiguating corner cases for languages which fastText views as similar to Russian (UA, TG, BG).

Preprocessing and language detection are done jointly using 16 threads spun up via multiprocessing package as HTML files can be processed independently.

3. Category Classification. Category classification is done using a simple SGDClassifier from scikit-learn with modified_huber loss. Input to a model is a vector from TfidfVectorizer which is run on a concatenation of the article title, domain, and description, with max length of such text capped at 500 characters. TfidfVectorizer operates on uni- and bi-grams (with NLTK stopwords), uses sublinear TF scaling, min_df=3, max_df=0.95, and dtype=np.float32.

For this task a simple SGDClassifier appears to be a great fit:

- It captures vocabulary breadth very well, which is important since there are many category-specific terms in the world of news and it is often necessary to memorize them based on only a few available examples. E.g. TfidfVectorizer vocabulary size is 142K for RU and 70K for EN what would be considered large for a typical use case but works quite well for capturing the diversity of news.

- With a simple model like this it’s possible to avoid overfitting even while using such a large vocabulary and a small dataset.

- It is incredibly fast and supports sparse matrix input. Sparsity means we can 1) efficiently work with large vocab size without the need to pass TF-IDF outputs through the expensive SVD step and 2) there is no need to maintain massive embedding matrices which take up RAM, disk space, and lead to overparameterized models.

- It is easy to interpret and debug (one can easily trace model predictions all the way to the weights assigned to each vocabulary term).

I also considered dozens of other model types — see Appendix B for why they they were not chosen.

TfidfVectorizer and SGDClassifier are trained on datasets like Lenta.ru (RU), HuffPost (EN), TagMyNews (EN), News Aggregator Dataset (EN), BBC (EN), Webhose (EN), as well as manually crawled websites for news categories (e.g. technology, auto, weather) which suffered from label shortage in the aforementioned datasets. Training set was additionally enriched using unlabeled sample data provided by Telegram by auto-assigning category labels using article URL information: e.g. if /football/ is in URL of a reputable domain we can attribute it to sports category with very high confidence. Overall, while maintaining a reasonable distribution of examples across classes (roughly matching ratios expected at the inference time), I managed to get ~120K training examples for EN and 313K for RU. In the table below you can see the number and ratio of training examples per category:

4. News Filtering. News filtering is based on a set of heuristics which are simple rules based on the following features:

- Category classifier (described above) confidence score

- Number of tokens in the article title / description

- Presence of special characters

- Matching on pre-approved or pre-banned words, subword units, or lemmas

- Title matching certain pre-compiled regexes: how-to articles, bad phrases, bad title beginnings, lists/enumerations (e.g. “10 tips for…”), etc

- Match on a known bad title pattern. This is a little trick you can use to find templated (e.g. auto-generated) titles, essentially by converting a title to a code (e.g.

Music — Lounge. 27.03.2020.becomesW — W. ##.##.####.) and clustering such codes or just checking most frequent. This takes only a couple of lines of code:

You can see detailed rules in the inference script.

5. Thread Clustering. News items are clustered into threads using DBSCAN with the following settings: min_samples=2, eps=0.55, metric='cosine', which is run on TF-IDF vectors of article titles. TF-IDF vectors are produced using TfidfVectorizer with SentencePiece tokenization function. Just as for news classification, I set dtype=np.float32 for TF-IDF vectorizer to speed things up a bit. TfidfVectorizer and SentencePiece models are trained over all EN and RU sample articles provided by Telegram (~600K per language). SentencePiece model has a vocabulary size of 10K tokens. Additionally, for some tokens (such as geo-related) I boost TF-IDF scores to give them more importance in clustering (this e.g. forces similarly-titled news from unrelated cities to be forced into separate clusters).

6. Article Sorting within a Thread. Within clusters, articles are primarily sorted by relevance (computed as the sum of dot products with vectors of all other articles in the cluster). Secondary and tertiary sorting by domain PageRank and freshness, respectively, is performed as well. When sorting, I ensure that no pair of consecutive articles is from the same publisher, providing a bit of diversity to the feed. For naming the article thread I simply use the title of the top article in a sorted list. All clustering operations are performed on a single sparse matrix and therefore are fairly fast.

7. Thread Sorting. News threads are sorted simply by the product of the number of unique domains in the thread with the combined PageRank of these domains. And if this score is the same for two articles, additional sorting by the number of articles in a thread is performed. Thread category is defined simply as the most frequent category amongst articles in a thread. Domain PageRank was computed using sample articles provided by Telegram for all domains with at least 100 publications (~3K domains).

📡 Part 2 — Online Processing with HTTP server

1. Spin up the HTTP server. For handling HTTP requests a SimpleHTTPRequestHandler is used. Server responds with HTTP/1.1 503 Service Unavailable until resources (such as models, vectorizers, vocabularies, and previous index state) are loaded.

2. Index incoming articles. As articles arrive in a never-ending stream, we basically have to perform real-time clustering, as article threads may expand or change with time. From contest description it was not clear whether we can tolerate any delays on clustering, so I decided to go for the solution in which index and article clusters are always up-to-date. To do this I wrote a naive agglomerative clustering algorithm, similar to SLINK.

Step 0: Incoming article is preprocessed, vectorized, and categorized as described above in the offline section. In case if article is non-{EN,RU} or not a piece of news we save it as such and skip the following steps.

Step 1: For weaving articles into threads we operate on 16-hour intervals (buckets). For a given article its previous, current, and future buckets are fetched, i.e. a 48-hour window is considered (we assume that no article thread will span more than two full days). We need to look into the “future” since it’s possible that some articles arrive with a delay, e.g. if a crawler found an article from yesterday we might want to stitch it to a cluster from today.

Step 2: Article title tokens with IDF score below a given threshold are picked (i.e. we ignore terms which are too generic). For such tokens, in each of the buckets (past, present, and future) we perform an inverted index lookup to get a subset of previously indexed articles which share one or more terms with the current article. Inverted index is a simple lil_matrix where rows are terms IDs and columns are article IDs — so we can make a lookup by IDs of tokens from TF-IDF vector in order to get IDs of relevant articles, which then can be used to look up vectors (“embeddings”) of relevant articles in the main “embedding” matrix.

Step 3: Amongst these article candidates we find the closest article by dot product similarity computed on L2-normalized TF-IDF vectors. If we find an existing article similarity of which is above a given threshold we can assign our new article to the cluster with the existing article. If we don’t find an existing article which is similar enough we create a new cluster.

Step 4: L2-normalized TF-IDF vector for the new article is stacked on top of the existing CSR matrix with vectors for previously indexed articles in this time bucket. CSR matrix works great for this use case as it allows for fast multiplication, combines nicely with sparsity of TF-IDF vectorizer, allows for fast v-stacking and row deletes, and overall appears to be the most efficient sparse matrix for this use case. Note that each time bucket has its own CSR matrix what greatly speeds up the dot product (as we can ignore vectors for all other irrelevant time periods) and also ensures that index can scale linearly with time (if we grossly oversimplify and assume constant daily number of news).

Step 5: All other relevant properties for the inverted index and cluster info (max time, category, list of articles) are updated accordingly.

3. Update existing articles. Article update happens if any of its attributes changed and is carried out by simply deleting and indexing it again.

4. Delete existing articles. Article deletion is straightforward, as we simply wipe all info about this article. One potentially interesting bit here is that we want to delete a row from the “embedding” matrix (with normalized TF-IDF vectors) which isn’t something CSR matrix supports by default. Luckily we can perform efficient in-place deletes like so:

5. Return top article threads, with optional filters by language, category, and time range. This is straightforward, as we only need to iterate over relevant time buckets for a given language and return article threads which belong to a given category within a specified time range.

🛠 Part 3 — Building a Binary

Telegram required an executable binary file to be provided in the contest submission. There was also an additional requirement to keep binary size under 200Mb. Therefore I used a separate conda environment with a minimal set of libraries used (only fasttext, numpy, scipy, scikit-learn, sentencepiece). Using this environment it is trivial to create a lightweight binary executable by pointing pyinstaller to the inference Python script:

If you want to run the app on sample data you should be able to do this using a binary produced at this step by running a command like this (following the API described here): ./tgnews threads "/path/to/directory/with/articles".

Or just run it directly using a Python script, e.g.: python tgnews.py threads "/path/to/directory/with/articles".

Conclusion

In the end, my solution is just a combination of Python, fastText, SentencePiece, TF-IDF, SGDClassifier, and DBSCAN. I find it to be very simple and a bit hacky yet despite this, it has ended up being 2nd by quality and 3rd by execution speed (on par or faster than C++ solutions 🤷♂). This simplicity allowed me to do everything on my old MacBook Pro 2012 — something rarely possible in Kaggle-like contests.

There are many things which I would’ve loved to do differently and I list some of them below. Hope you’ve enjoyed reading this and learned something new. If you have any questions at all, please reach out to me on LinkedIn. I also highly recommend reading a couple of comprehensive overviews of the awesome solutions that ranked 1st and 3rd in the competition.

And of course I’d like to close by thanking organizers for putting this event together. It was a great bit of fun and I definitely learned a lot.

🚀 Appendix A. Potential Improvements

- Support for parallel requests. At the evaluation stage Telegram team was sending up to 100 parallel requests to the binary which is not something my submitted app supported. Thankfully they could still send requests in a single stream for the majority of the evaluation stages, yet this could not be done for the “Today” mode, so my app fully crashed there. I thought this would disqualify my submission altogether, but probably a strong performance in all other tasks helped with the final ranking.

- News Filtering should be based on a model and not a set of regex-based heuristics. One idea would be merging news filtering and categorization tasks, as some contestants did (1, 2), however there may be benefits in keeping them separate. In either case, there is a need for carefully labeled “not-news” examples, as this is a vaguely defined concept for which little-to-none public data is available.

- News Categorization model quite obviously lacks depth (i.e. it doesn’t make decisions based on “meaning” but rather bases them on memorizing certain term-category associations). If compute resources permit, it would be useful to combine breadth of the current model with the depth and non-linearities which neural nets bring. This could be done using Wide & Deep models, model ensembles, or other techniques.

- Pre-trained embeddings could be added across the whole pipeline (news filtering, categorization, clustering). I omitted them fully due to the 200Mb binary size requirement.

- Other article and publisher features should be used. Using only title, URL, and part of a description is extremely limiting.

- Current clustering based on exhaustive dot product search should be thrown away in favor of scalable high-performance ANN methods similar to ScaNN, HNSW, and FAISS. This will help support million- and billion-sized indices while keeping latency low.

- GPUs/TPUs should be leveraged, as well as high memory (RAM) machines to keep relevant parts of the index in memory.

- Creating a task-specific labeled dataset. It wouldn’t be too expensive to get 100K-1M labels, what should be enough for fine-tuning models trained on public datasets. If budget is a constraint, active learning will help. By not labeling sample articles I likely ran into training-serving skew and all sorts of other unwanted biases. Team from the 1st place labeled articles via raters which let them significantly outrank other submissions in the categorization task.

😢 Appendix B. What Didn’t Work

- HDBSCAN for batch offline clustering. Typically HDBSCAN is a go-to tool for clustering which scales extremely well and has very high-quality outputs. However here DBSCAN outperformed it in both quality and speed IIRC.

- BIRCH for streaming clustering (too slow).

- DenStream for streaming clustering (custom algorithm was more flexible).

- FAISS for streaming clustering (IIRC, it wasn’t much faster than well-optimized sparse matrix operations, especially at low data volumes).

- Using

float16(instead offloat32). Coming from GPU world I thought this is somethingscipyandnumpycan support as well, but unfortunately they don’t, asfloat16is not supported on CPU. - Other model types for news classification: I tried everything from

scikit-learnthat works with sparse inputs. No other model type could beat the quality and speed of the simpleSGDClassifier.ComplementNBwas close or slightly better in performance but lead to a larger binary file size. - Other frameworks for news classification (TF, Keras, PyTorch): I ruled them out from the very beginning in order to keep binary size small and inference fast. Adding TF to a standalone binary will push it way over 200Mb limit.

- Loading text files as

jsonorgzip. This is significantly slower than plain oldpickledue to the decompression and the need to parsejsonfile. - Bypassing news filtering for all articles from top publishers by PageRank. Idea was that maybe they never post non-news content. I was wrong, sometimes there are articles which would be classified as “not news” according to the task description.

- Using TF-IDF-weighted CBOW fastText embeddings for clustering (instead of sparse TF-IDF vectors). Usually you’ll find that TF-IDF-weighted embeddings (e.g. word2vec) is a very strong, fast, and simple baseline yet here it was slower and lead to more diluted clusters.

- EN inflections from WordNet. LemmInflect was a bit better quality-wise.

⚠️ Update (25.09.2020) ⚠️

Issues around “Today” mode have been resolved. Updated app supports up to 100 parallel requests and was significantly sped up by introducing incremental writes to disk, thread locks, and limiting input length. Now it can handle indexing of up to 200 news articles per second, regardless of the index size. Live app is available at https://1410.topnews.com/.

This post originally appeared on Medium.