We've designed our team structure, development processes, and technical architecture to promote strong team ownership and iteration velocity. HubSpot makes a growth stack platform (marketing, CRM, and customer success software) trusted by more than 64,000 customers to power their businesses. They rely on our SaaS platform to host their websites, blogs, landing pages and forms, collect web analytics, manage their contacts, store prospecting data, deliver marketing emails, and more. The product scope is relatively broad -- each functional area has many companies that focus on just that one vertical -- and it is growing as we continue to introduce new products.

Our stack is a function not only of building B2B SaaS offerings and of the company's stage and size, but also of the cultural values that we want reflected in our products and organization.

Small, autonomous teams are the core

Each team typically consists of a tech lead (TL) and two developers working with a product manager and a designer. The teams are kept small to minimize scaling challenges and communication overhead (e.g. very few meetings). This also allows the tech lead to be deeply technical and product-focused while spending time coaching the two developers they work alongside. (A small number of people support the TLs by focusing on organizational issues and structure.) This unit owns a functional part of the product (ex. "Social Media") and is chartered to make meaningful progress for its customers. (Around this team are others that provide services for user testing, data management, reliability monitoring, etc. to allow it to focus on solving customers' problems.) We have a frontend team and a backend team for any given area. This structure is informed by many feedback loops: usability testing, direct customer interactions, usage tracking, customer support and other stakeholders. The team decides what ideas to build and how best to implement them, and it owns their ongoing operation. If something is broken they own fixing it -- there is no QA team to off-load responsibility to. If the user flow is confusing then they own iterating on it. When customers are excited about what they have shipped they get the kudos.

The overall result has been to allow for rapid progress and to harness developers' passion for improving the customer experience. Often as teams grow, individual contributors are forced into people or project management -- this structure allows for technical mentoring while minimizing the number of full-time people managers. The primary challenge in this model is driving design and technical consistency. Conway's Law poses an obstacle: teams must communicate effectively to avoid silos and divergence. There is a strong set of peer communities (ex. PMs, Java back-end developers) across product teams that work to stay on the same page. Cross-cutting initiatives are sometimes harder and often lead to scenarios of "eventual consistency" where teams evolve toward a similar goal over time.

Microservices

The HubSpot products are composed of 1,200+ different web services and dozens of static front-end apps. Together these microservices form the products our customers buy. Most web services are written in Java using the Dropwizard framework, and the front-end largely uses Backbone and React in CoffeeScript. A single team will likely own several services. The exact scope of a service varies, but having more than ~5 actively contributing developers can lead to coordination overhead. Services communicate through RESTful JSON APIs or through a messaging system like Apache Kafka.

This architecture aligns well with having clear ownership and quick iterations. There are over 9,000 separately deployable units that can be scaled independently. Each family of services is owned by a team, so the appropriate people are notified of service problems. It has facilitated scaling the organization, as there is a pattern for forming new teams and spinning up new services. There is additional complexity in understanding how a distributed architecture is performing and in managing configuration (ex. services using different versions of a shared library). For smaller teams, this trade-off against a monolithic app (ex. Rails) may not be advantageous until a tipping point in the amount of code or number of developers.

Approaching continuous delivery

The goal of shipping frequently is first and foremost to increase our rate of learning. It allows us to get rapid feedback from users, gather data points on the correctness and scalability of the code, and deliver value to customers.

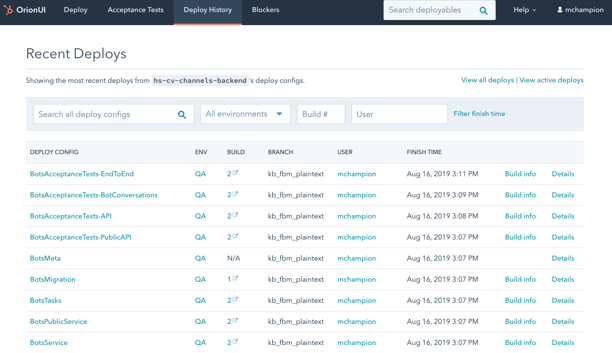

To allow small teams to focus on improving the product we've developed many internal tools that make shipping code simple and provide a safety net. Any commit to our hundreds of GitHub repos will trigger a build in Blazar, our open source build system that utilizes standard buildpacks for different frameworks (ex. Java Dropwizard, static apps, etc). Assuming all tests pass, a web-based deployer puts that build on the relevant hosts with a few clicks. Initial deploys of a build are put on a shared QA environment which aims to mirror the production environment as much as possible (except in scale). Only those builds deployed to QA are eligible to be deployed to production. The goal is a "Heroku-like" experience where developers have zero friction in pushing small changes frequently, and are shielded from needing to know the exact steps being performed.
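The rule that only builds already deployed to QA are eligible for production can be pictured with a small sketch. This is not Blazar's or the deployer's actual code or API; the `Build` class, the `deploy` function, and the environment names `"qa"` and `"prod"` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Build:
    """One build produced from a commit (hypothetical model, not Blazar's)."""
    repo: str
    number: int
    tests_passed: bool
    deployed_to: set = field(default_factory=set)


class PromotionError(Exception):
    """Raised when a build is not eligible for the requested environment."""


def deploy(build: Build, environment: str) -> None:
    """Record a deploy, enforcing 'tests pass' and 'QA before production'."""
    if not build.tests_passed:
        raise PromotionError(f"{build.repo}#{build.number}: tests did not pass")
    if environment == "prod" and "qa" not in build.deployed_to:
        raise PromotionError(f"{build.repo}#{build.number}: deploy to QA first")
    build.deployed_to.add(environment)
```

With a gate like this, the developer never has to remember the promotion order: a premature production deploy is simply rejected.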

Deploys

Core to the goal of small, safe deploys is feature flags (or gates). As a feature develops over time it can be safely merged into the primary branch behind a flag in stages. This separates deploying code from releasing the feature. The primary advantage is that the developer controls who sees the new functionality, while ensuring that it is technically sound in production. The typical order might progress from just the developer, to her team, to customers in a beta group, and then to all customers. Depending on the scope of the feature that may happen within hours or weeks. At any time the feature can be hidden again without another deploy. No longer should developers have to wait weeks to merge branches, or pray when they deploy a large set of changes to all customers (especially on a Friday at 5pm). The downside is some additional code complexity -- effectively extra "if" statements -- and a clean-up task after a feature is fully released. It is a simple concept that has been around for years but still appears to be used less than it deserves.
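As a rough illustration of the staged rollout described above, here is a minimal sketch of a flag that can be opened to individual users, then to groups, then to everyone. The `FeatureFlag` class, `render_dashboard`, and the user and group names are all hypothetical; HubSpot's actual gating system is not described in this post.

```python
from dataclasses import dataclass, field


@dataclass
class FeatureFlag:
    """A gate that is widened in stages (hypothetical model)."""
    name: str
    enabled_users: set = field(default_factory=set)   # e.g. the developer, her team
    enabled_groups: set = field(default_factory=set)  # e.g. "beta"
    enabled_for_all: bool = False

    def is_enabled(self, user_id: str, groups=frozenset()) -> bool:
        if self.enabled_for_all:
            return True
        if user_id in self.enabled_users:
            return True
        # Enabled if the user belongs to at least one enabled group.
        return bool(self.enabled_groups & set(groups))


def render_dashboard(flag: FeatureFlag, user_id: str, groups=frozenset()) -> str:
    # This is the extra "if" statement that flags add to the code.
    if flag.is_enabled(user_id, groups):
        return "new dashboard"
    return "old dashboard"
```

Because the flag state lives outside the deployed code, widening the audience or hiding the feature again is a data change rather than a deploy.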

The only way to feel comfortable deploying frequently is to have insight into the state of the running service. In addition to off-the-shelf tools for exception tracking and tracing, we've invested in ensuring every service has built-in health checks with reasonable defaults. An internal project, dubbed "Rodan", instruments services to collect standard metrics (ex. requests/sec, server errors) as well as developer-defined ones. Each service is part of a family that has configurable alerts and PagerDuty integration to let teams set appropriate thresholds. It has struck a useful balance by having developers own their alerting rules, while avoiding swamping inboxes with email alerts.
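The combination of default health checks, developer-defined checks, and team-owned thresholds might look roughly like the sketch below. Rodan is internal and its interface is not described here, so the `Service` class, `alerts_for`, and the metric names are assumptions made purely for illustration.

```python
from typing import Callable, Dict, List, Tuple

# A health check returns (healthy, message).
HealthCheck = Callable[[], Tuple[bool, str]]


class Service:
    """A service with one built-in check plus developer-defined ones."""

    def __init__(self, name: str, family: str) -> None:
        self.name = name
        self.family = family
        # Reasonable default: every service gets a trivial liveness check.
        self.checks: Dict[str, HealthCheck] = {"ping": lambda: (True, "ok")}

    def register_check(self, name: str, check: HealthCheck) -> None:
        self.checks[name] = check

    def run_checks(self) -> Dict[str, Tuple[bool, str]]:
        results = {}
        for name, check in self.checks.items():
            try:
                results[name] = check()
            except Exception as exc:
                # A check that raises is reported as unhealthy, not as a crash.
                results[name] = (False, f"check raised {exc!r}")
        return results


def alerts_for(metrics: Dict[str, float], thresholds: Dict[str, float]) -> List[str]:
    """Return, sorted, the names of metrics that exceed their team-set threshold."""
    return sorted(
        name for name, limit in thresholds.items()
        if metrics.get(name, 0.0) > limit
    )
```

Keeping the thresholds as plain per-family data is one way to let each team tune its own alerting without touching shared code.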

Having a distributed architecture means that recording application metrics is often the best way to see what's truly happening. Our applications capture a lot of runtime metrics (via Dropwizard Metrics, the JVM, etc). To visualize and alert on those metrics HubSpot uses SignalFx. Each type of deployable has a set of standard metrics, and developers can create application-specific metrics. We use additional tooling to analyze application traces.

Mesos, Singularity and beyond

HubSpot has long been a heavy user of Amazon EC2 for server hosting. More recently we've taken advantage of Apache Mesos and Singularity, our own open source scheduler, to manage our large EC2 clusters. In conjunction, they have driven significantly higher density per server to reduce cost. Previously most services provisioned instances in isolation because coordination was too complicated. Even more important than the cost savings has been the ability to insulate developers from details about any particular instance. Now the platform can handle problems like instance failures or availability-zone issues that previously required developer intervention. Mesos has been adopted by companies like OpenTable and Groupon but has required significant investment to see the benefits of this new platform. As we invest in our stack we look to solve challenges programmatically with small developer teams, rather than larger numbers of people in specialized operations roles.
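To see why shared scheduling raises density compared with provisioning each service in isolation, consider a toy packing exercise. This is a textbook first-fit-decreasing heuristic, not how Mesos or Singularity actually place tasks; the function name, the task names, and the CPU figures are made up for the example.

```python
from typing import Dict, List


def pack_first_fit_decreasing(task_cpus: Dict[str, float], host_cpus: float) -> List[List[str]]:
    """Place tasks on as few identical hosts as the heuristic manages."""
    hosts: List[List[str]] = []   # task names placed on each host
    free: List[float] = []        # CPUs still free on each host
    # Largest tasks first, so small ones can fill the leftover gaps.
    for task, cpus in sorted(task_cpus.items(), key=lambda kv: kv[1], reverse=True):
        if cpus > host_cpus:
            raise ValueError(f"{task} needs {cpus} CPUs but a host has {host_cpus}")
        for i, remaining in enumerate(free):
            if cpus <= remaining:
                hosts[i].append(task)
                free[i] -= cpus
                break
        else:
            # No existing host has room, so start a new one.
            hosts.append([task])
            free.append(host_cpus - cpus)
    return hosts


# Five hypothetical tasks and 4-CPU hosts: one host per task would need
# five instances, while packing them together needs fewer.
tasks = {"a": 2.0, "b": 1.0, "c": 1.0, "d": 3.0, "e": 1.0}
placement = pack_first_fit_decreasing(tasks, host_cpus=4.0)
print(len(placement), "hosts instead of", len(tasks))
```

A real scheduler also has to weigh memory, ports, availability zones, and failures, which is part of the investment the paragraph above refers to.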

This stack has evolved considerably over time as HubSpot has grown, and we expect that to continue. In particular, as we look to scale our existing products and build completely new ones, there will be a series of significant challenges to solve. Hopefully the principles behind how we choose to build software will guide us as we tackle them.

Note: this post originally appeared on StackDive, covering the technology behind Boston startups.